The Legal Ambiguity of Copilot

If you’re involved with an open source community, chances are high that you have heard of open source licenses. Open source licenses play the important role of defining what others may or may not do with a project’s code, whether that means using, modifying, or distributing it. There are a variety of open source licenses, each with different terms and different degrees of flexibility. One of the most widespread concepts in open source licensing is copyleft. Generally, copyleft licenses allow others to use, modify, and distribute a work on the condition that any derived works are released under the same terms.

In recent years, GitHub has developed Copilot, an artificial intelligence tool billed as an “AI pair programmer” that generates code and coding suggestions from a user’s natural language input. The goal of Copilot is to make coding easier and to save users the time they would otherwise spend reading documentation. According to this article by the Software Freedom Conservancy, “Copilot was trained on ‘billions of lines of public code…written by others’” from numerous GitHub repositories. Controversy has arisen because GitHub has refused to release a list of the repositories used in the training set, and it has been confirmed that copylefted code appears in that set. Consequently, many people are questioning the legality of GitHub’s actions and argue that, since copylefted code was used in training, Copilot itself should be an open source, copylefted project. Contrary to this view, I believe that GitHub’s Copilot is not breaking any legal rules.

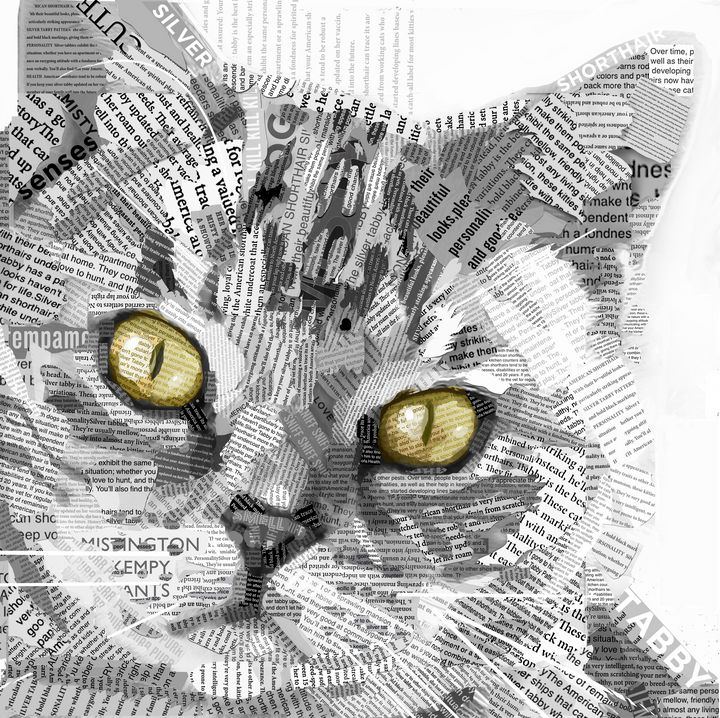

To think about Copilot’s structure and purpose more generally, consider a piece of collage art.

Finally, from a computer programmer’s perspective, consider everyone’s favorite website: stackoverflow.com. According to this article from ICTRecht, small code snippets on Stack Overflow that are so simple that any two programmers could come up with them independently are not protected by copyright. It can be argued that most of the code Copilot draws on is similarly generic and could be thought up by anyone, so there should be no legal issue with using it to train Copilot.

In all, the Copilot controversy shows how difficult it may be to resolve the legal ambiguity surrounding AI programs. Perhaps in the future, a new license that specifically considers the nature of AI will be implemented and popularized to clarify some of this ambiguity. For now, it appears that we will have to assess situations like this on a case-by-case basis.
